Configuring AI Evaluator Nodes in Workflow Automation

The AI Evaluator node uses an AI model to analyze the actions and outcomes within a workflow. It returns insights and recommendations that you can use to improve workflow performance and efficiency.

To get started with the AI Evaluator node, follow these steps:

- Add the AI Evaluator Node: Add the node to your workflow and select the integration type (e.g., AWS Bedrock for evaluation).

- Provide a Prompt: Enter a description for the evaluation task.

- Select the AI Model: Choose the AWS Bedrock model (or OpenAI model) you want to use for the evaluation.

- Save Changes: After configuring, save your settings.

This configuration allows the AI Evaluator to analyze actions and outcomes within your workflow, providing insights to optimize performance and ensure the best results.

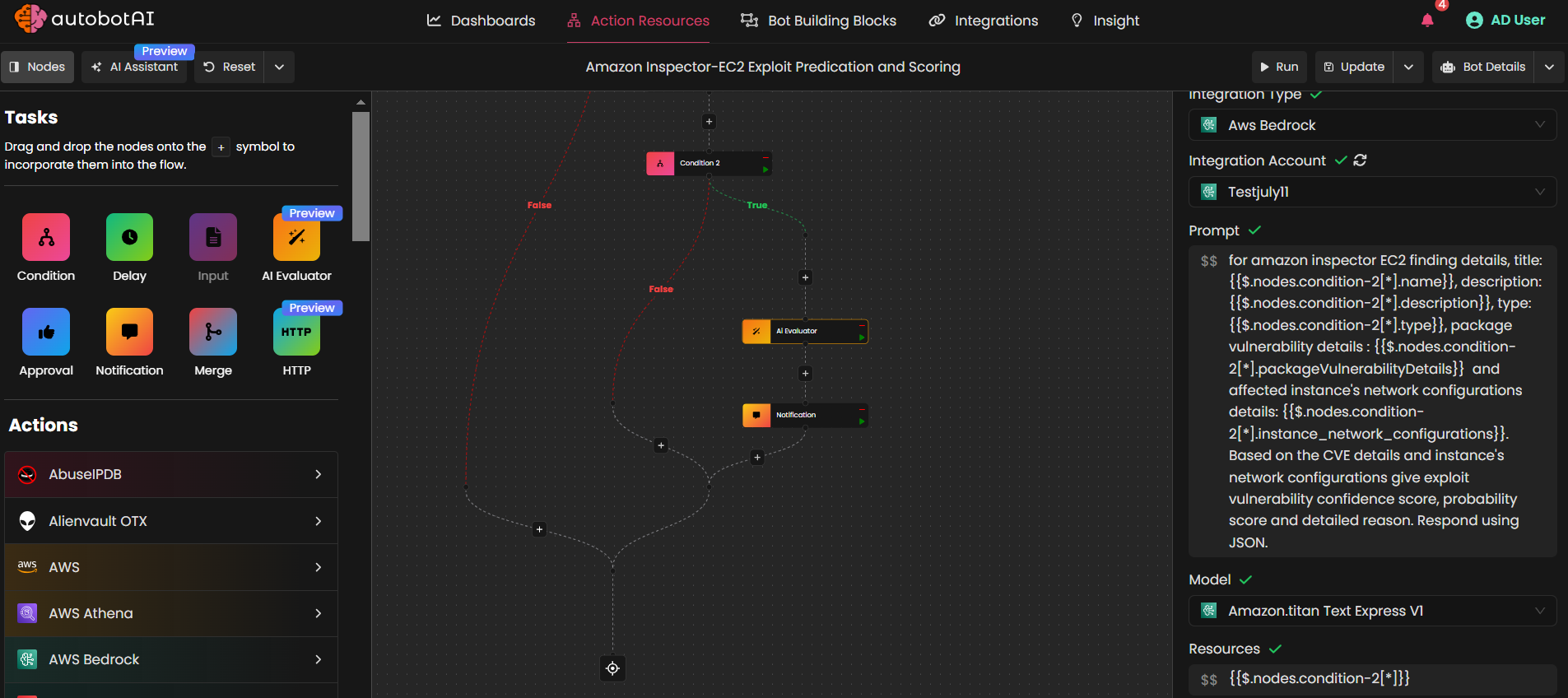

How to Configure an AI Evaluator Node

Follow these steps to set up and configure the AI Evaluator node in your workflow:

1. Add the AI Evaluator Node

Drag the AI Evaluator node from the node library into your workflow canvas.

2. Open Configuration

Click the AI Evaluator node to open its configuration panel.

3. Configure Evaluation

- Add Node: Place the AI Evaluator node into your bot workflow.

- Select AI Model: In the "Integration Type" field, choose an AI model, such as AWS Bedrock or OpenAI.

- Provide the Prompt:

Enter a detailed prompt that describes the evaluation task. For example:

For Amazon Inspector EC2 findings, Title: {{$.nodes.condition-2[*].name}}, Description: {{$.nodes.condition-2[*].description}}, Package Vulnerability Details: {{$.nodes.condition-2[*].packageVulnerabilityDetails}}, Affected Instance Network Configurations: {{$.nodes.condition-2[*].instance_network_configurations}}. Based on the CVE details and instance network configurations, provide an exploit vulnerability confidence score, probability score, and detailed reasoning. Respond in JSON format.

- Select AI Model Details:

Choose the AI model you'd like to use for the evaluation. For example:

- AWS Bedrock: Amazon Titan, Meta Llama 3, Meta Llama 2, Mistral

- OpenAI: GPT-3.5, GPT-4, DALL·E, Babbage, and more.

- Select Resource:

Click $$ to select a resource from a previous node in the workflow.

- Save Changes:

Once you have configured all the necessary settings, click Save Changes.
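To make the prompt placeholders more concrete, here is a minimal Python sketch of how a placeholder such as {{$.nodes.condition-2[*].name}} could be filled in from the output of an earlier node. This is an illustration only, not the product's actual template engine: the `render_prompt` function, the shape of `node_outputs`, and the sample findings are all assumptions made for this example.

```python
import json
import re

# Matches placeholders such as {{$.nodes.condition-2[*].name}}.
# Capture groups: (1) the node id, (2) the field name.
PLACEHOLDER = re.compile(r"\{\{\$\.nodes\.([\w-]+)\[\*\]\.(\w+)\}\}")


def render_prompt(template: str, node_outputs: dict) -> str:
    """Replace each placeholder with a JSON list of that field's values.

    node_outputs maps a node id to the list of records it produced.
    This shape is assumed purely for illustration.
    """
    def substitute(match: re.Match) -> str:
        node_id, field = match.group(1), match.group(2)
        records = node_outputs.get(node_id, [])
        # [*] selects the field from every record of the node's output.
        values = [record.get(field) for record in records]
        return json.dumps(values)

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical output of the upstream node "condition-2".
node_outputs = {
    "condition-2": [
        {"name": "Example finding A", "description": "Outdated package"},
        {"name": "Example finding B", "description": "Open port"},
    ]
}

template = (
    "Title: {{$.nodes.condition-2[*].name}}, "
    "Description: {{$.nodes.condition-2[*].description}}"
)

rendered = render_prompt(template, node_outputs)
print(rendered)
```

In this sketch, each placeholder expands to the values of one field across all records from the referenced node, so the model sees every finding in a single prompt.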

4. Test the AI Evaluator Node

Click the Run button in the node interface to test the AI Evaluator node. The node runs the evaluation you defined, such as a security threat assessment, so you can confirm that the output contains the insights and recommendations you expect.
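When the prompt asks the model to respond in JSON, it can help to check the test output for the fields you requested. The following Python sketch shows one way to do that outside the product. The field names (`confidence_score`, `probability_score`, `reasoning`) and the sample reply are hypothetical; the actual keys depend on your prompt and on the model you selected.

```python
import json

# Assumed field names; the real keys depend on the prompt and the model.
REQUIRED_FIELDS = ("confidence_score", "probability_score", "reasoning")


def parse_evaluation(raw: str) -> dict:
    """Extract the JSON object from a model reply and check its fields."""
    # Models sometimes add text around the JSON, so take the outermost braces.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    result = json.loads(raw[start:end + 1])
    missing = [name for name in REQUIRED_FIELDS if name not in result]
    if missing:
        raise ValueError(f"reply is missing fields: {missing}")
    return result


# Hypothetical reply, shaped like the output requested in the example prompt.
sample_reply = (
    'Here is the evaluation: {"confidence_score": 0.8, '
    '"probability_score": 0.6, '
    '"reasoning": "Instance is publicly reachable."}'
)

evaluation = parse_evaluation(sample_reply)
print(evaluation["confidence_score"], evaluation["reasoning"])
```

If the check fails during testing, tightening the prompt (for example, naming the exact keys you expect) is usually the first thing to try.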

Outcome

By configuring the AI Evaluator node, you can gain valuable insights into workflow performance and receive actionable suggestions to enhance efficiency and outcomes.